In a blog post published last week, Meta asks, “Where are the robots?” The answer is simple. They’re here. You just need to know where to look. It’s a frustrating answer. I recognize that. Let’s set aside conversations about cars and driver assistance and just focus on things we all tend to agree are robots. For starters, that Amazon delivery isn’t making it to you without robotic assistance.

A more pertinent question would be: Why aren’t there more robots? And more to the point, why aren’t there more robots in my house right now? It’s a complex question with a lot of nuance, much of it coming down to current hardware limitations around the concept of a “general purpose” robot. Roomba is a robot. There are a lot of Roombas in the world, and that’s largely because Roombas do one thing well (an additional decade of R&D has helped advance things from a state of “pretty good”).

It’s not so much that the premise of the question is flawed — it’s more a question of reframing it slightly. “Why aren’t there more robots?” is a perfectly valid question for a nonroboticist to ask. As a longtime hardware person, I usually start my answer there. I’ve had enough conversations over the past decade that I feel fairly confident I could monopolize the entire conversation discussing the many potential points of failure with a robot gripper.

Meta’s take is software-based, and that’s fair enough. Over the past few years, I’ve witnessed an explosion in startups tackling various important categories like robotic learning, deployment/management and no- and low-code solutions. An evergreen shoutout here to the nearly two decades of research and development that’s gone into creating, maintaining and improving ROS. Fittingly, longtime steward Open Robotics was acquired by Alphabet, which has been doing its own work in the category through its homegrown efforts, Intrinsic and Everyday Robots (which, admittedly, were disproportionately impacted by org-wide resource slashing).

Meta/Facebook no doubt does its own share of skunkworks projects that surface every so often. I’ve seen nothing so far to suggest that they’re on a scale with what Alphabet/Google has explored over the years, but it’s always interesting to see some of these projects peek their heads out. In an announcement I strongly suspect is tied to the proliferation of generative AI discussions, the social media giant has shared what it calls “two major advancements toward general-purpose embodied AI agents capable of performing challenging sensorimotor skills.”

Quoting directly here:

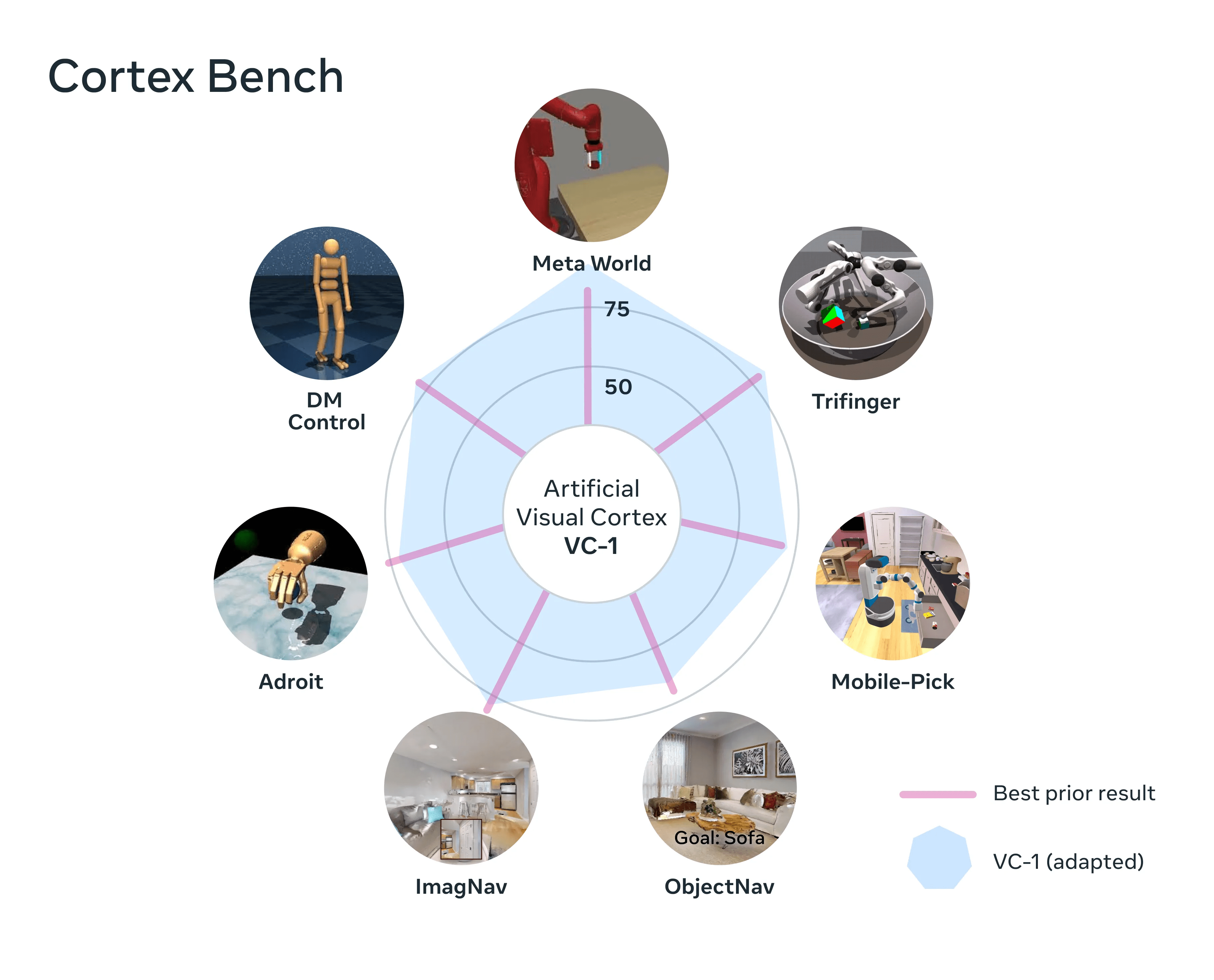

An artificial visual cortex (called VC-1): a single perception model that, for the first time, supports a diverse range of sensorimotor skills, environments, and embodiments. VC-1 is trained on videos of people performing everyday tasks from the groundbreaking Ego4D dataset created by Meta AI and academic partners. And VC-1 matches or outperforms best-known results on 17 different sensorimotor tasks in virtual environments.

A new approach called adaptive (sensorimotor) skill coordination (ASC), which achieves near-perfect performance (98 percent success) on the challenging task of robotic mobile manipulation (navigating to an object, picking it up, navigating to another location, placing the object, repeating) in physical environments.

Image Credits: Meta

Interesting research, no doubt, and I’m excited to potentially drill down on some of this, moving forward. The phrase “general purpose” is getting tossed around a lot these days. It’s a perpetually interesting topic of conversation in robotics, but there’s been a massive proliferation of general-purpose humanoid robots coming out of the woodwork in the wake of the Tesla bot unveil. For years, people have told me things like, “Say what you will about Musk, but Tesla has driven renewed interest in EVs,” and that’s more or less how I feel about Optimus at the moment. It’s served an important dual role of renewing the discussion around the form factor, while providing a clear visual to point to when explaining how hard this stuff is. Is it possible to dramatically raise the public’s expectations while tempering them at the same time?

Again, those conversations dovetail nicely with all of these GPT breakthroughs. This stuff is all very impressive, but Rodney Brooks put the danger of conflating things quite well in this very newsletter a few weeks back: “I think people are overly optimistic. They’re mistaking performance for competence. You see a good performance in a human, you can say what they’re competent at. We’re pretty good at modeling people, but those same models don’t apply. You see a great performance from one of these systems, but it doesn’t tell you how it’s going to work in adjacent space all around that, or with different data.”

Image Credits: Covariant

Obviously, I didn’t let that stop me from asking most of the folks I spoke to at ProMat for their takes on generative AI’s future role in robotics. The answers were . . . wide ranging. Some shrug it off, others see a very regimented role for the tech, and others still are extremely bullish about what all of this means for the future. In last week’s newsletter, Peter Chen, the CEO of Covariant (which just raised a fresh $75 million), offered some interesting context when it comes to generalized AI:

Before the recent ChatGPT, there were a lot of natural language processing AIs out there. Search, translate, sentiment detection, spam detection — there were loads of natural language AIs out there. The approach before GPT is, for each use case, you train a specific AI to it, using a smaller subset of data. Look at the results now, and GPT basically abolishes the field of translation, and it’s not even trained to translation. The foundation model approach is basically, instead of using small amounts of data that’s specific to one situation or train a model that’s specific to one circumstance, let’s train a large foundation-generalized model on a lot more data, so the AI is more generalized.

Of course, Covariant is currently hyper-focused on picking and placing. It’s frankly a big enough challenge to keep them occupied for a long time. But one of the promises systems like this offer is real-world training. Companies that actually have real robots doing real jobs in the real world are building extremely powerful databases and models around how machines interact with the world around them (the walls of a research facility can be limiting in this respect).

It’s not hard to see how many of the seemingly disparate building blocks being fortified by researchers and companies alike might one day come together to create a truly general-purpose system. When the hardware and AI are at that level, there’s going to be a seemingly bottomless trove of field data to train them on. I’ll admit that I did a little bit of robotic roster mixing and matching on the floor at ProMat, trying to determine how close we are given the current state of commercially available technology.

For the time being, the platform approach makes a lot of sense. With Spot, for example, Boston Dynamics is effectively selling customers on an iPhone model. First you produce gen one of an impressive piece of hardware. Next you offer an SDK to interested parties. If things go as planned, you’ve suddenly got this product doing things your team never imagined. Assuming that doesn’t involve mounting a gun to the back of the product (per BD’s guidelines), that’s an exciting proposition.

Image Credits: 1X

It’s way too early to say anything definite about 1X Technologies’ NEO robot, beyond the fact that the firm is clearly hoping to live right in that cross section between robotics and generative AI. Certainly it has a powerful ally in OpenAI. The generative AI giant’s Startup Fund led a $23.5 million round, which also featured Tiger Global, among others.

Says 1X founder and CEO Bernt Øivind Børnich, “1X is thrilled to have OpenAI lead this round because we’re aligned in our missions: thoughtfully integrating emerging technology into people’s daily lives. With the support of our investors, we will continue to make significant strides in the field of robotics and augment the global labor market.”

One interesting note on that (to me at least) is that 1X has actually been kicking around for a minute. The Norwegian firm was known as Halodi until its very recent (exactly one month ago) pithy rebrand. You only have to go back a year or two to see the beginnings of its take on the humanoid form factor, which the company was developing for food service. The tech definitely appears more sophisticated than its 2021 counterpart, but the wheeled base betrays how much further is left to go to get to some version of the robot we see in its renders.

Incidentally, maybe it’s me, but there seems to be some convergent evolution happening here:

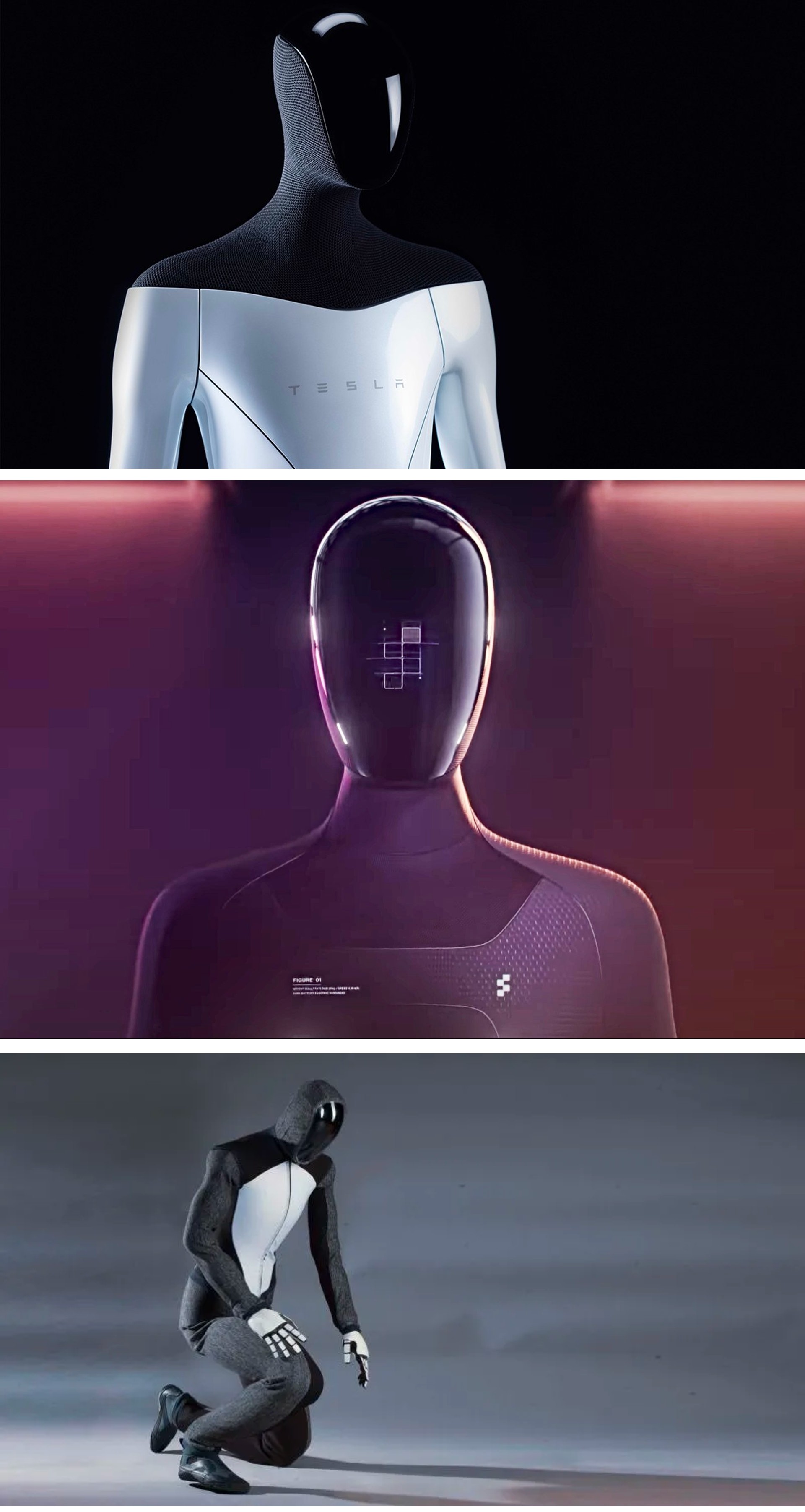

Image Credits: Tesla/Figure/1X (collage by the author)

Top to bottom, these are renders of Tesla Optimus, Figure 01, and 1X Neo. Not direct copies, obviously, but they certainly look like they could be cousins. Neo is the one that insists on wearing a hoodie, even to formal occasions. Listen, I’m not an industrial designer, but what about a cowboy hat or something?

Image Credits: MIT CSAIL

What say we finish off the week of news with a pair of research projects? The first is a fun one out of MIT. When you really think about it, playing soccer is a great way to test locomotion. There’s a reason RoboCup has been kicking for more than 25 years. In the case of DribbleBot, however, the challenge is uneven terrain, including stuff like grass, mud and sand.

Says MIT professor Pulkit Agrawal:

If you look around today, most robots are wheeled. But imagine that there’s a disaster scenario, flooding, or an earthquake, and we want robots to aid humans in the search and rescue process. We need the machines to go over terrains that aren’t flat, and wheeled robots can’t traverse those landscapes. The whole point of studying legged robots is to go to terrains outside the reach of current robotic systems.

Image Credits: UCLA

The second research project is from UCLA’s Samueli School of Engineering, which recently published findings from its work around origami robots. The Origami MechanoBots, or “OrigaMechs,” rely on sensors embedded into their thin polyester building blocks. Principal investigator Ankur Mehta has some fairly far-out plans for the tech.

“These types of dangerous or unpredictable scenarios, such as during a natural or manmade disaster, could be where origami robots proved to be especially useful,” he said in a post tied to the news. “The robots could be designed for specialty functions and manufactured on demand very quickly. Also, while it’s a very long way away, there could be environments on other planets where explorer robots that are impervious to those scenarios would be very desirable.”

It’s not quite the surface of Venus, but the prey-sensing flytrap is pretty neat nonetheless.

Jobs

All right, how about a fresh round of job listings? I plan to continue doing these periodically in chunks, going forward. The best way to get listed is to follow me over on LinkedIn and respond on threads where I announce a new job is coming up. This is certainly not the most efficient way to do it, but it’s been working for me, so I’m going to stay the course.

As I mentioned this week, I’m going to prioritize those who haven’t been featured before.

Robot Jobs for Human People

Aescape (14 roles)

Apptronik (20 roles)

Dexterity (18 roles)

Foxglove (3 roles)

Phantom Auto (21 roles)

Sanctuary AI (15 roles)

Slamcore (5 roles)

Woven by Toyota (4 roles)

Image Credits: Bryce Durbin/TechCrunch

Come, explore distant worlds with Actuator. Subscribe here.

The robots are already here by Brian Heater originally published on TechCrunch
